Regularization Machine Learning Mastery

In their 2014 paper Dropout. Machine Learning Mastery Blog.

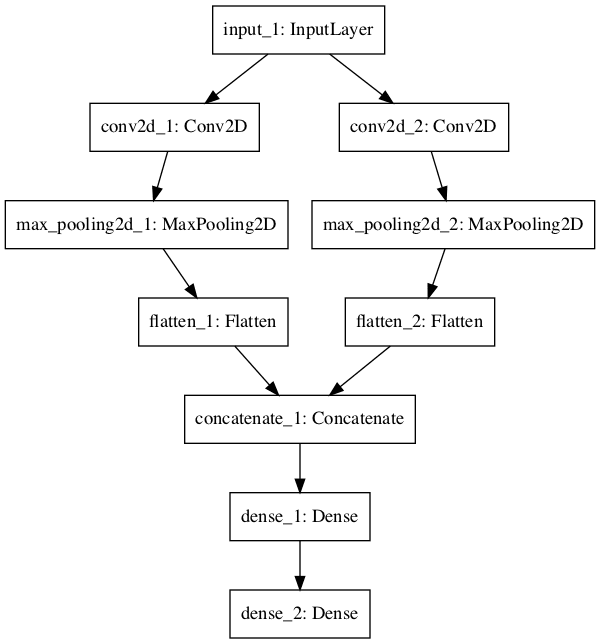

How To Use The Keras Functional Api For Deep Learning

Machine Learning Mastery Contact.

. A Simple Way to Prevent Neural Networks from Overfitting download the PDF. You can contact me with your question but one question at a time please. I write a lot about applied machine learning on the blog try the search feature.

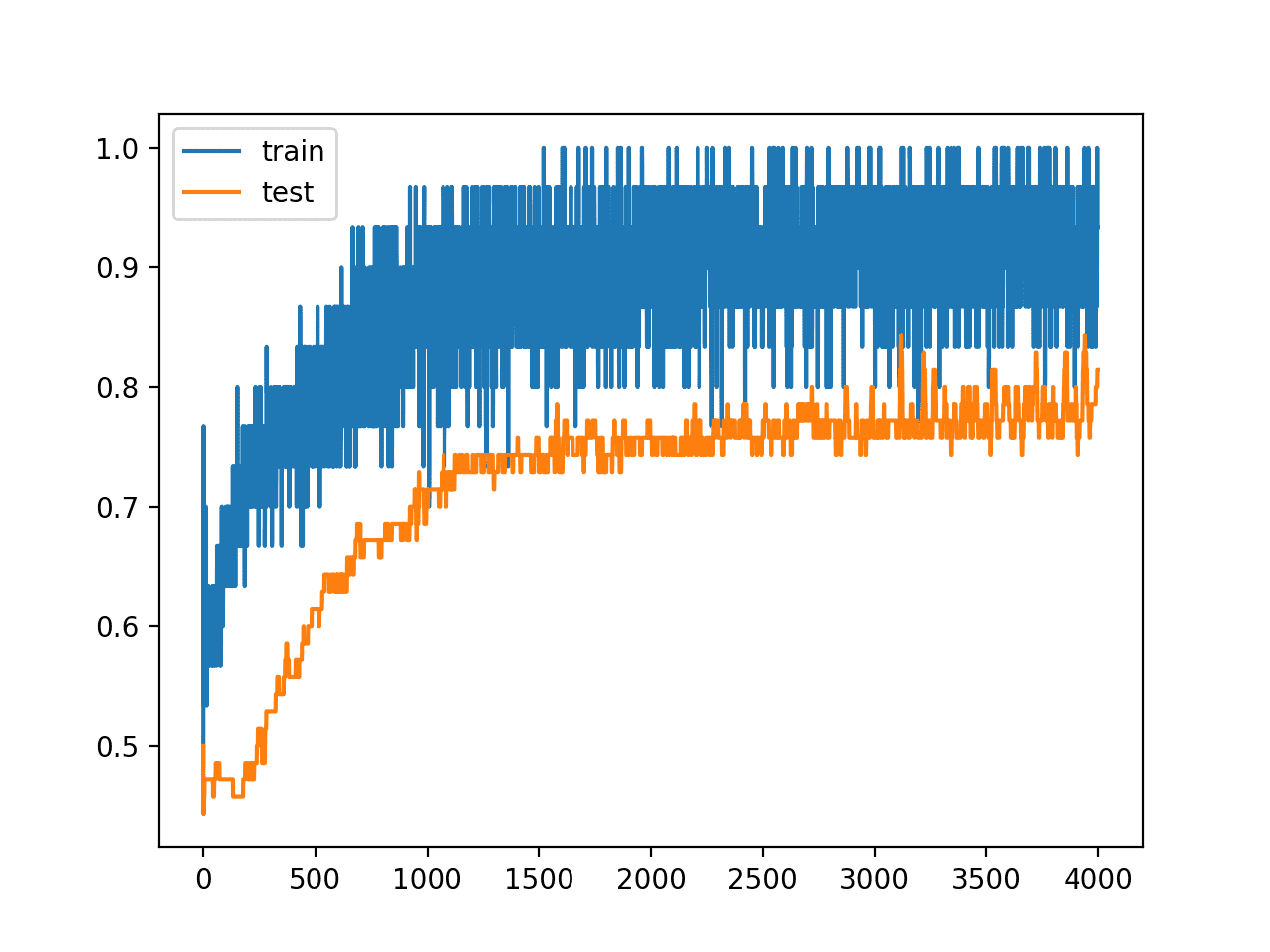

Dropout is a regularization technique for neural network models proposed by Srivastava et al. Dropout is a technique where randomly selected neurons are ignored during training. The most common questions I get and their answers Machine Learning Mastery FAQ.
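A minimal sketch of the idea in pure Python, assuming a layer's activations are a plain list of floats. It implements the "inverted dropout" variant used by libraries such as Keras, where surviving units are scaled by 1 / (1 - rate) during training. The 2014 paper instead keeps every unit at test time and scales the weights down by the retention probability; both keep the expected activation the same. The function name `dropout` and its parameters are illustrative, not any library's API.

```python
import random

# Illustrative helper (not a library API): inverted dropout on a plain list of
# activations. The list-of-floats representation is an assumption for clarity.
def dropout(activations, rate, training=True, rng=None):
    """Zero each unit with probability `rate` during training.

    Surviving units are scaled by 1 / (1 - rate) so the expected value of each
    activation is unchanged; at inference the input is returned unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    if rng is None:
        rng = random.Random()
    keep_prob = 1.0 - rate
    # rng.random() is uniform on [0, 1), so each unit is kept with prob keep_prob.
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so the mask is reproducible
    hidden = [0.5, 1.2, -0.3, 0.8, 2.0]
    print(dropout(hidden, rate=0.5, rng=rng))         # some units zeroed, rest doubled
    print(dropout(hidden, rate=0.5, training=False))  # unchanged
```

Passing a seeded `random.Random` makes the mask reproducible. Because the rescaling happens during training, the inference path is a plain pass-through with no extra scaling step.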

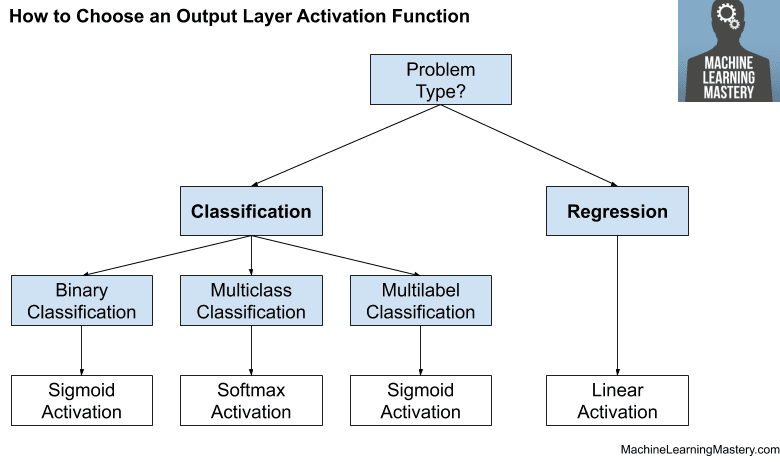

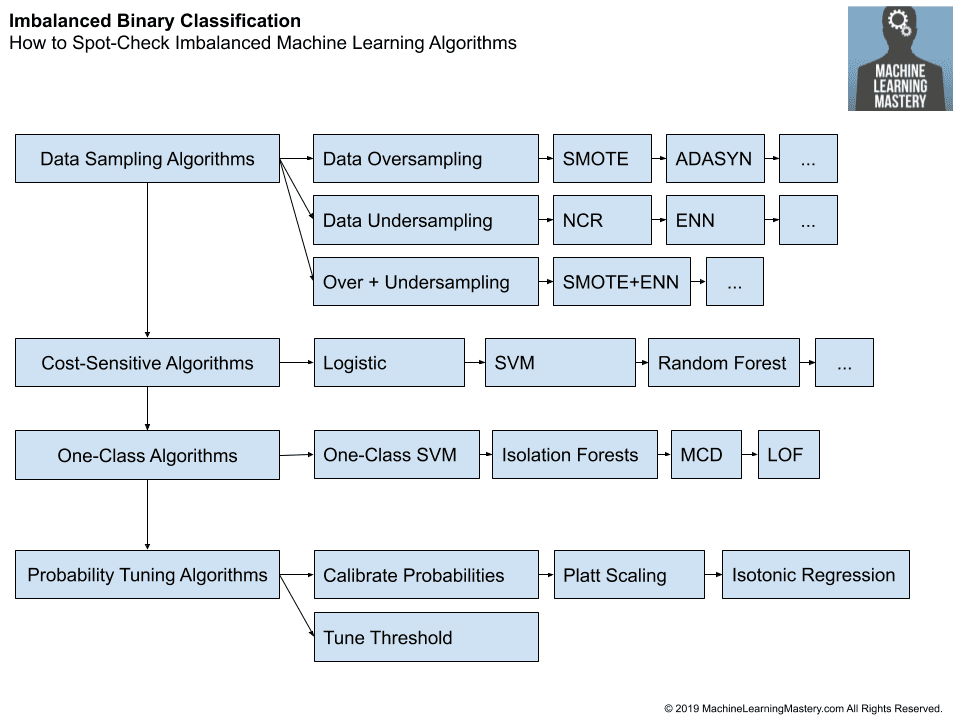

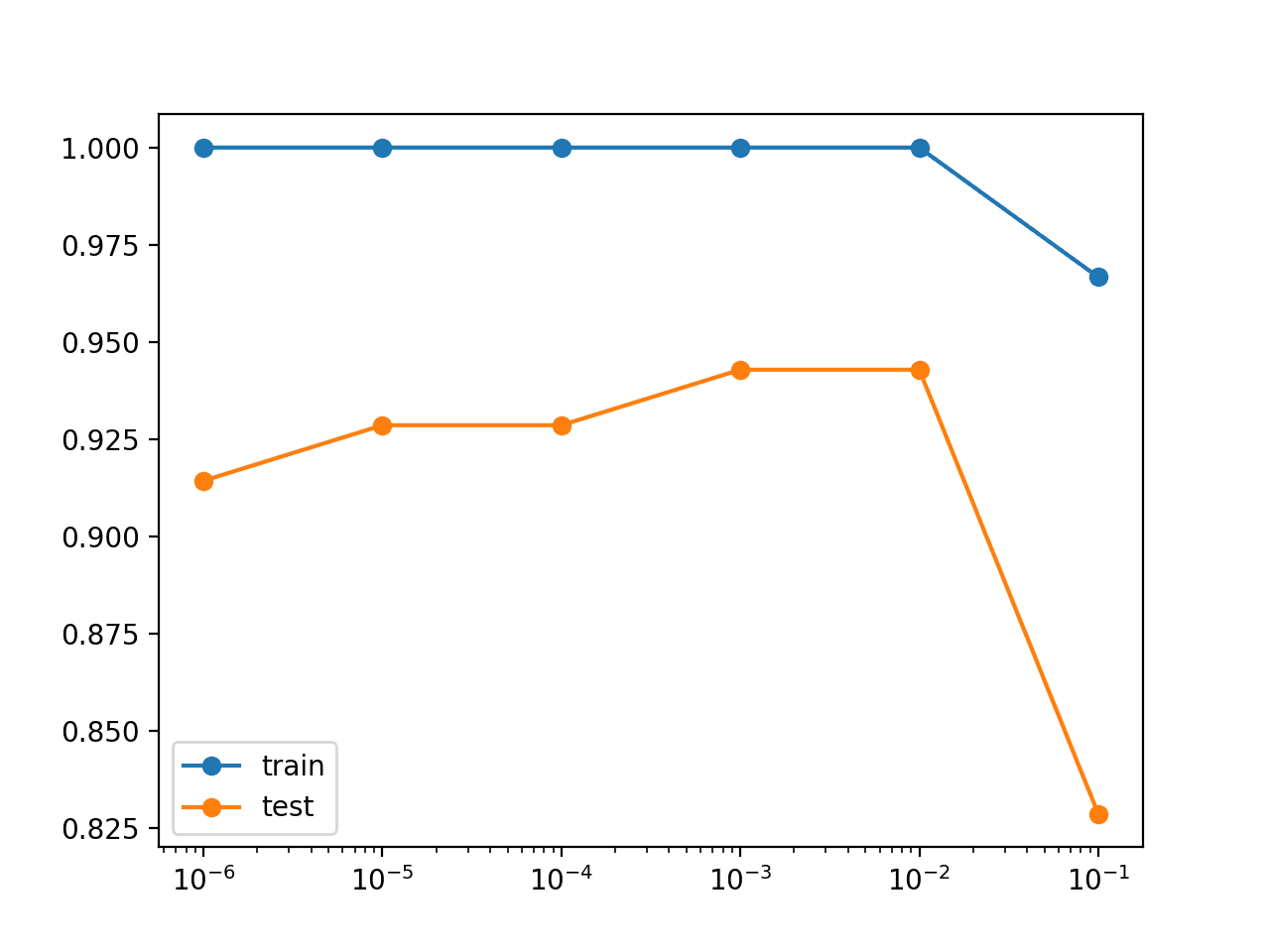

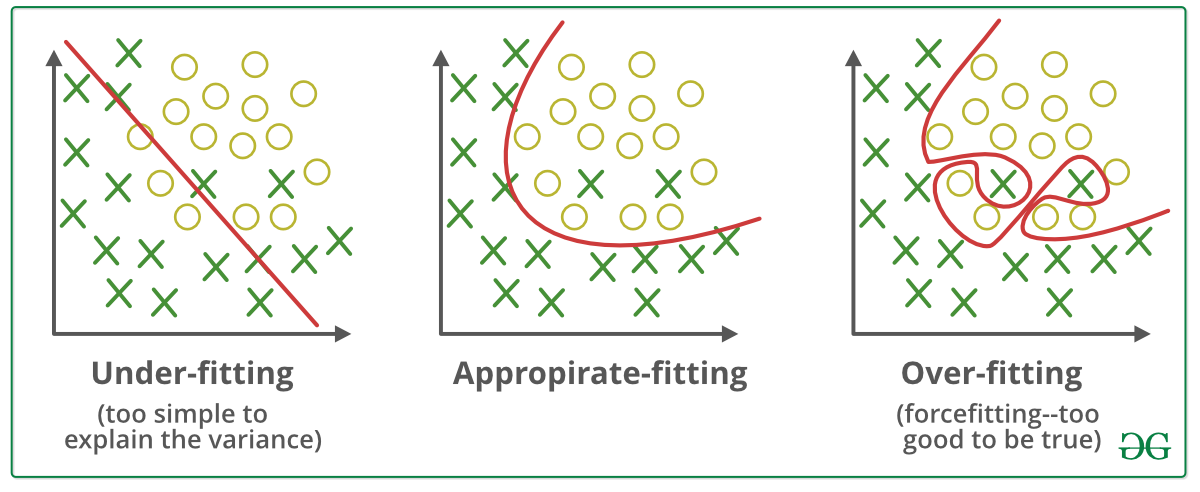

Dropout Regularization For Neural Networks

Regularization is a technique for penalizing large coefficients in order to avoid overfitting, and the strength of the penalty should be tuned. In this part, we will cover the Big 3 machine learning tasks, which are by far the most common ones.
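The penalty idea can be sketched with one common choice, an L2 (ridge) penalty. Assumptions: a 1-D linear model y ≈ w·x with no intercept, and a simple hold-out split for tuning the penalty strength λ; the helper names `ridge_coefficient`, `mse`, and `tune_lambda` are hypothetical, and the toy data in the usage block is invented for illustration. The penalized loss is Σ(y − w·x)² + λ·w², which is minimized at w = Σxy / (Σx² + λ), so a larger λ shrinks w toward zero.

```python
# Hypothetical helpers illustrating an L2 (ridge) penalty on a 1-D model y ≈ w * x
# with no intercept; names and data layout are assumptions, not a library API.

def ridge_coefficient(xs, ys, lam):
    """Minimize sum((y - w*x)**2) + lam * w**2 in closed form.

    Setting the derivative to zero gives w = sum(x*y) / (sum(x*x) + lam),
    so a larger penalty strength `lam` shrinks w toward zero.
    """
    if lam < 0:
        raise ValueError("lam must be non-negative")
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def mse(xs, ys, w):
    """Mean squared error of the model y ≈ w * x."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


def tune_lambda(train, valid, grid):
    """Grid-search the penalty strength on held-out data.

    `train` and `valid` are (xs, ys) tuples; returns (lam, valid_mse, w)
    for the candidate with the lowest validation error.
    """
    best = None
    for lam in grid:
        w = ridge_coefficient(*train, lam)
        err = mse(*valid, w)
        if best is None or err < best[1]:
            best = (lam, err, w)
    return best


if __name__ == "__main__":
    xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]
    for lam in (0.0, 1.0, 10.0, 100.0):
        print(lam, ridge_coefficient(xs, ys, lam))  # slope shrinks as lam grows
    print(tune_lambda((xs, ys), ([4.0], [8.0]), [0.0, 1.0, 10.0]))
```

An L1 (lasso) penalty, λ·|w|, is the other common choice; it tends to set some coefficients exactly to zero rather than merely shrinking them. In practice λ is usually chosen by cross-validation rather than a single hold-out split.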

This is Part 1 of this series.

A Tour Of Machine Learning Algorithms

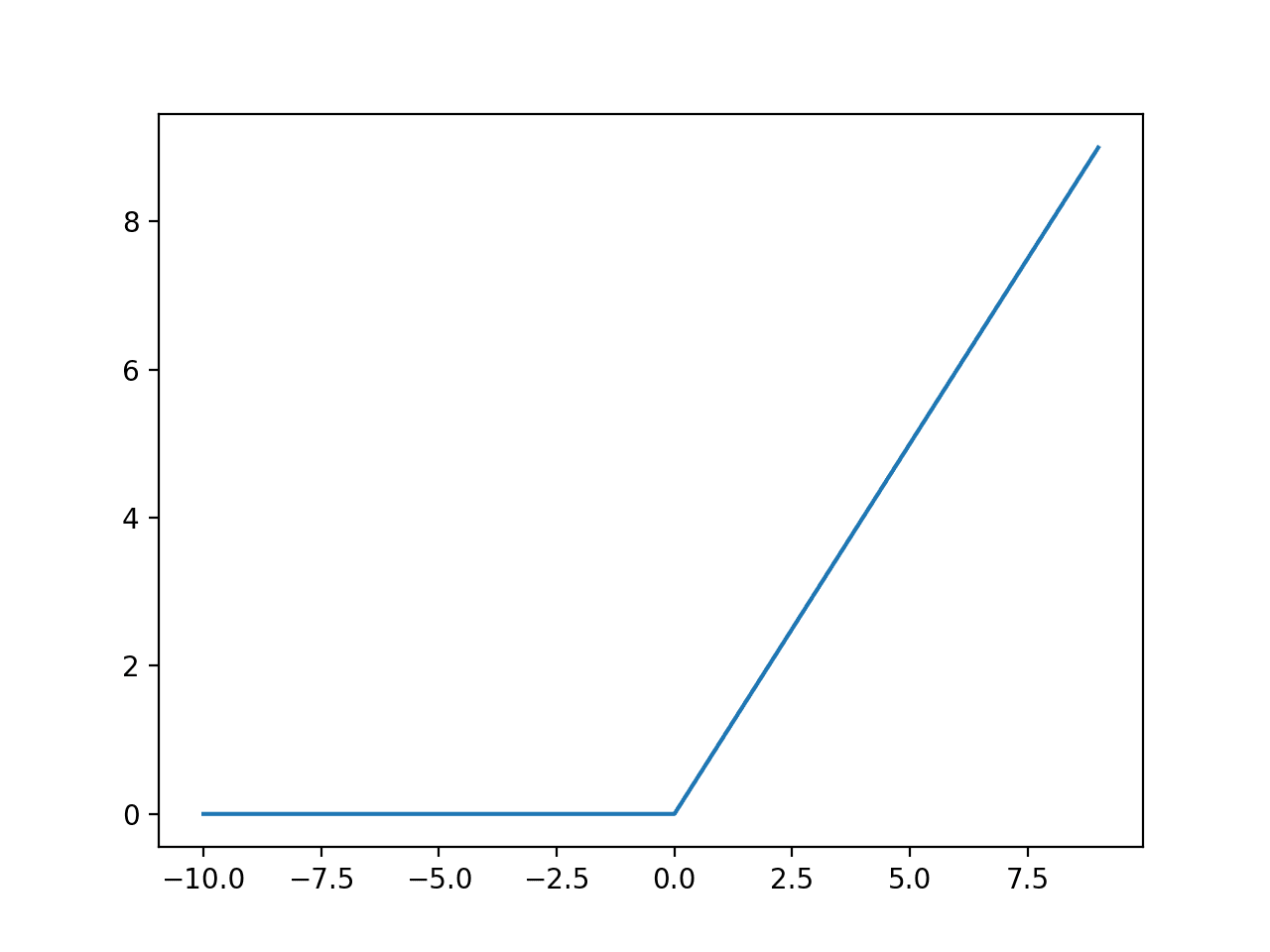

A Gentle Introduction To The Rectified Linear Unit (ReLU)

Issue 4 Out Of The Box AI Ready The AI Verticalization Revue

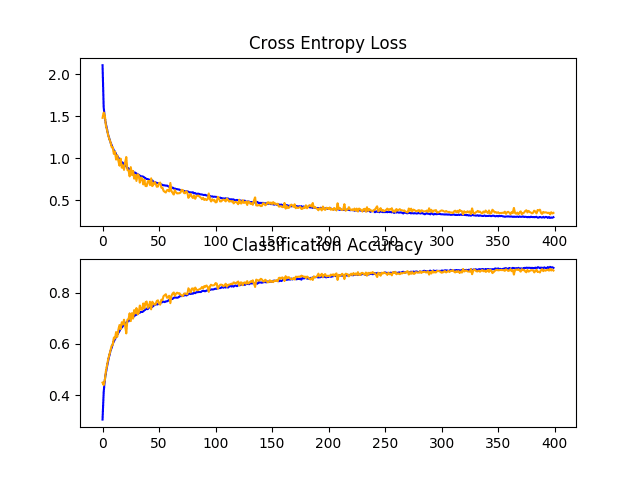

A Gentle Introduction To Dropout For Regularizing Deep Neural Networks

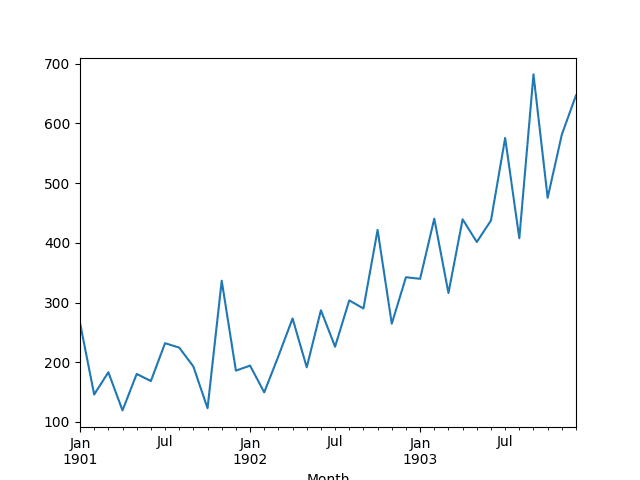

Weight Regularization With LSTM Networks For Time Series Forecasting

Machine Learning Algorithms Mindmap Jixta

Regularization In Machine Learning And Deep Learning, by Amod Kolwalkar (Analytics Vidhya, Medium)

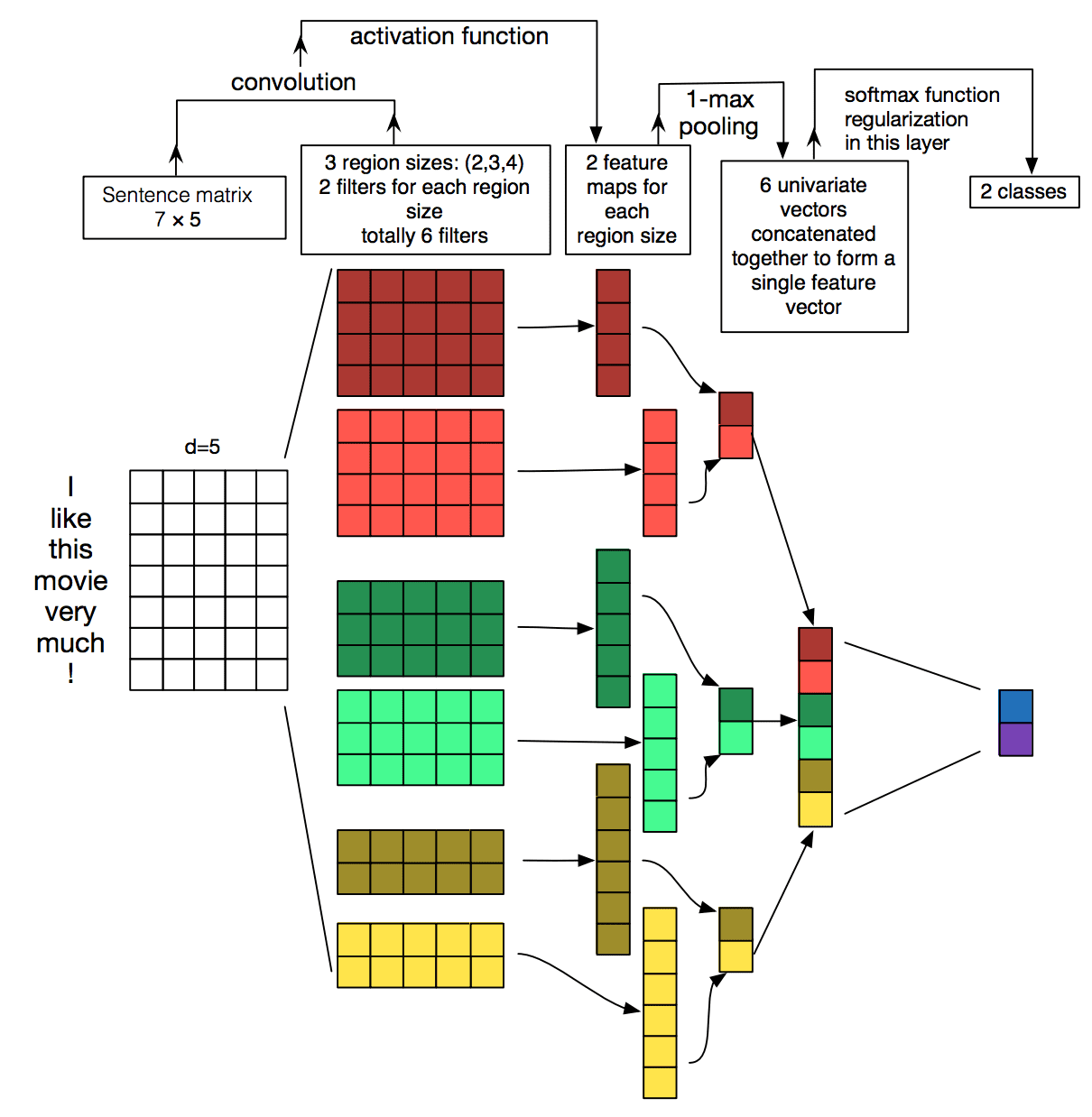

Best Practices For Text Classification With Deep Learning

Machine Learning Mastery With R: Get Started, Build Accurate Models And Work Through Projects Step By Step (PDF)

Machine Learning Mastery Workshop, Enthought Inc.